Most Windows users know that the easiest way to check the size of a folder is to open the folder properties in File Explorer. More experienced users prefer third-party tools like TreeSize or WinDirStat. However, if you want more detailed statistics on the size of folders in a specific directory, or want to exclude certain file types, PowerShell is the better choice. In this article, we’ll show you how to quickly get the size of a specific folder on the disk (including all subfolders) using PowerShell.

You can use the Get-ChildItem (alias gci) and Measure-Object (alias measure) cmdlets to get the size of files and folders (including subfolders) in PowerShell. Get-ChildItem lists the files in a directory that match the specified criteria, and each file’s size in bytes is stored in its Length property. Measure-Object then calculates statistics over that property, such as the sum.

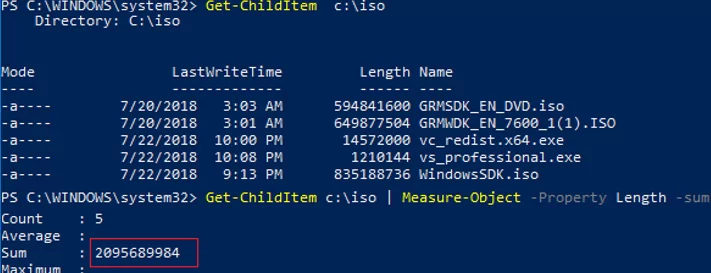

For example, to get the size of the C:\ISO folder, run the following command:

```powershell
Get-ChildItem C:\ISO | Measure-Object -Property Length -Sum
```

As you can see, the Sum field shows the total size of the files in this directory in bytes, which is about 2.1 GB here.

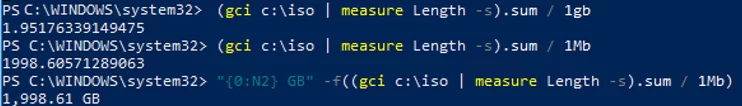
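If you want to see how Measure-Object reports the total without touching a real folder, here is a small self-contained sketch. It creates a temporary folder with three files of known size (the folder name and sizes are made up for the demo), sums their Length property, and then deletes the folder. Sum is in bytes, and Count is the number of measured items.

```powershell
# Create a temporary demo folder (hypothetical name) with three files of known size
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
New-Item -ItemType Directory -Path $demo | Out-Null
foreach ($size in 1000, 2000, 3000) {
    # Each file contains $size zero bytes
    [System.IO.File]::WriteAllBytes((Join-Path $demo "file$size.bin"), [byte[]]::new($size))
}

# Sum the Length property (file size in bytes) of all items in the folder
$result = Get-ChildItem -LiteralPath $demo | Measure-Object -Property Length -Sum
$result.Sum    # 6000 (bytes)
$result.Count  # 3

# Remove the demo folder
Remove-Item -LiteralPath $demo -Recurse -Force
```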

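To convert the size to megabytes or gigabytes, divide the sum by 1GB or 1MB. Spell out -Sum here: in PowerShell 7, the abbreviation -s is ambiguous because Measure-Object also has a -StandardDeviation parameter.

```powershell
(gci c:\iso | measure Length -Sum).sum / 1Gb
```

Or:

```powershell
(gci c:\iso | measure Length -Sum).sum / 1Mb
```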

To round the result to two decimal places, run the following command:

```powershell
"{0:N2} GB" -f ((gci c:\iso | measure Length -Sum).sum / 1Gb)
```

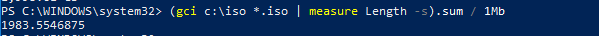
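Note that the -f operator always returns a string, formatted with the decimal separator of the current culture. That is fine for display, but strings are sorted and compared as text. If you plan to sort or compare the result later, [math]::Round() keeps it a number. A short sketch (the byte count is only a sample value):

```powershell
# The size suffixes are plain numeric constants
1MB   # 1048576
1GB   # 1073741824

$bytes = 2254857830   # sample total, as Measure-Object might return it

# -f returns a [string], e.g. "2.10" (or "2,10" in a comma-decimal culture)
$asText = '{0:N2}' -f ($bytes / 1GB)

# [math]::Round returns a [double] rounded to 2 decimal places
$asNumber = [math]::Round($bytes / 1GB, 2)

$asText.GetType().Name    # String
$asNumber.GetType().Name  # Double
```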

You can also calculate the total size of all files of a certain type in a directory. For example, to get the total size of all ISO files in a folder, run:

```powershell
(gci c:\iso *.iso | measure Length -Sum).sum / 1Mb
```

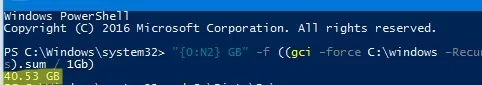
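To exclude certain file types instead, one option is the -Exclude parameter of Get-ChildItem. The sketch below builds a throwaway folder (file names and sizes are made up), then measures only the *.iso files and, separately, everything except *.tmp files. According to the Get-ChildItem documentation, without -Recurse the -Exclude parameter is reliably applied only when the path includes the folder contents, such as C:\ISO\*, which is why the second command appends a wildcard.

```powershell
# Demo folder with two ISO files, a text file and a temporary file (made-up names and sizes)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
New-Item -ItemType Directory -Path $demo | Out-Null
[System.IO.File]::WriteAllBytes((Join-Path $demo 'a.iso'), [byte[]]::new(5000))
[System.IO.File]::WriteAllBytes((Join-Path $demo 'b.iso'), [byte[]]::new(3000))
[System.IO.File]::WriteAllBytes((Join-Path $demo 'd.txt'), [byte[]]::new(200))
[System.IO.File]::WriteAllBytes((Join-Path $demo 'c.tmp'), [byte[]]::new(700))

# Only *.iso files (-Filter is the second positional parameter of Get-ChildItem)
$isoBytes = (Get-ChildItem $demo *.iso | Measure-Object Length -Sum).Sum

# All files except *.tmp; the trailing * makes -Exclude apply to the folder contents
$noTmpBytes = (Get-ChildItem (Join-Path $demo '*') -Exclude *.tmp -File | Measure-Object Length -Sum).Sum

"ISO files: $isoBytes bytes; everything except .tmp: $noTmpBytes bytes"
Remove-Item -LiteralPath $demo -Recurse -Force
```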

The commands above return only the total size of the files located directly in the specified directory; files in its subfolders are not counted. To include subdirectories, add the -Recurse parameter. For example, to get the total size of all files in the C:\Windows folder:

```powershell
"{0:N2} GB" -f ((gci -force c:\Windows -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).sum / 1Gb)
```

The -Force parameter makes the command include hidden and system files in the calculation.

So the size of C:\Windows on my local drive is about 40 GB. The script sums the file sizes from the Length property, so NTFS compression is not taken into account.

The -ErrorAction SilentlyContinue parameter suppresses the error messages for files and folders that the command cannot access.

Keep in mind that this command may report an incorrect size for a directory that contains symbolic or hard links. For example, the C:\Windows folder contains many hard links to files in the WinSxS folder (Windows Component Store), so such files can be counted several times. To skip hard-linked files, use the following command (it takes a long time to complete):

```powershell
"{0:N2} GB" -f ((gci -force C:\windows -Recurse -ErrorAction SilentlyContinue | Where-Object { $_.LinkType -notmatch "HardLink" } | measure Length -Sum).sum / 1Gb)
```

As you can see, the result is slightly smaller. Note that this filter skips every file that has more than one hard link, so none of the links to such a file is counted, and the result understates the folder size.
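To see what -Recurse changes, you can compare both variants on a small folder tree. This sketch (temporary folder, made-up sizes) adds the -File switch, available since PowerShell 3.0, so that only files are measured:

```powershell
# Demo tree: one file in the top folder and one in a subfolder (hypothetical sizes)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
$sub  = Join-Path $demo 'sub'
New-Item -ItemType Directory -Path $demo | Out-Null
New-Item -ItemType Directory -Path $sub | Out-Null
[System.IO.File]::WriteAllBytes((Join-Path $demo 'top.bin'), [byte[]]::new(1000))
[System.IO.File]::WriteAllBytes((Join-Path $sub 'nested.bin'), [byte[]]::new(4000))

# Without -Recurse only the files directly in $demo are counted
$topOnly = (Get-ChildItem -LiteralPath $demo -File -Force | Measure-Object Length -Sum).Sum

# With -Recurse the files in all subfolders are included as well
$withSub = (Get-ChildItem -LiteralPath $demo -File -Force -Recurse | Measure-Object Length -Sum).Sum

"Top level only: $topOnly bytes; including subfolders: $withSub bytes"
Remove-Item -LiteralPath $demo -Recurse -Force
```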

You can use filters to select the files to consider when calculating the final size. For example, you can get the size of files created in 2020:

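```powershell
(gci -force c:\ps -Recurse -ErrorAction SilentlyContinue | ? {$_.CreationTime -ge '1/1/2020' -and $_.CreationTime -lt '1/1/2021'} | measure Length -Sum).sum / 1Gb
```

The upper bound is January 1, 2021 (exclusive), so files created at any time on December 31, 2020 are included as well.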

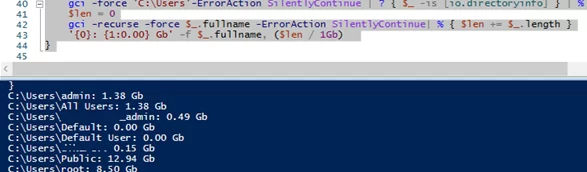
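Here is a self-contained sketch of the same date filter that you can run anywhere. It filters on LastWriteTime because that property is easy to backdate for a demo; the logic is the same for CreationTime. File names and dates are hypothetical.

```powershell
# Demo folder with one file from 2020 and one from 2023 (hypothetical dates)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
New-Item -ItemType Directory -Path $demo | Out-Null
$old = Join-Path $demo 'old.log'
$new = Join-Path $demo 'new.log'
[System.IO.File]::WriteAllBytes($old, [byte[]]::new(1500))
[System.IO.File]::WriteAllBytes($new, [byte[]]::new(2500))
(Get-Item -LiteralPath $old).LastWriteTime = [datetime]'2020-06-15'
(Get-Item -LiteralPath $new).LastWriteTime = [datetime]'2023-03-01'

# Half-open range: on or after Jan 1, 2020 and before Jan 1, 2021 (covers all of Dec 31)
$from = [datetime]'2020-01-01'
$to   = [datetime]'2021-01-01'
$bytes2020 = (Get-ChildItem -LiteralPath $demo -Recurse -File |
    Where-Object { $_.LastWriteTime -ge $from -and $_.LastWriteTime -lt $to } |
    Measure-Object Length -Sum).Sum

"Files modified in 2020: $bytes2020 bytes"
Remove-Item -LiteralPath $demo -Recurse -Force
```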

You can also get the size of each first-level subfolder in a directory. For example, to get the size of every user profile in the C:\Users folder:

```powershell
gci -force 'C:\Users' -ErrorAction SilentlyContinue | ? { $_ -is [io.directoryinfo] } | % {
    $len = 0
    # Add up the sizes of all files in this subfolder, including nested ones
    gci -recurse -force $_.fullname -ErrorAction SilentlyContinue | % { $len += $_.length }
    # Print the folder path and its size in GB
    '{0} {1:N2} GB' -f $_.fullname, ($len / 1Gb)
}
```

Here % is an alias for the ForEach-Object cmdlet, and ? is an alias for Where-Object.

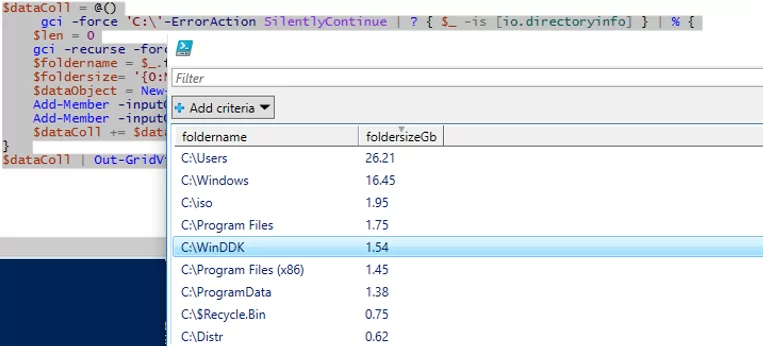
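If you want the subfolder sizes as sortable data rather than text, a variant of the script above can return one object per subfolder with numeric properties. The sketch below is one way to do it: it runs Measure-Object for each subfolder, also reports the number of files, and sorts the folders by size. The function name Get-SubfolderSize and its property names are hypothetical, and the -Directory and -File switches need PowerShell 3.0 or later.

```powershell
# Hypothetical helper: size and file count of each first-level subfolder of $Path
function Get-SubfolderSize {
    param([Parameter(Mandatory = $true)][string]$Path)

    Get-ChildItem -LiteralPath $Path -Directory -Force -ErrorAction SilentlyContinue | ForEach-Object {
        # Measure all files below this subfolder, at any depth
        $m = Get-ChildItem -LiteralPath $_.FullName -Recurse -File -Force -ErrorAction SilentlyContinue |
            Measure-Object -Property Length -Sum
        [PSCustomObject]@{
            Folder = $_.FullName
            Files  = [int]$m.Count
            Bytes  = [long]$m.Sum                         # an empty folder gives 0
            SizeGB = [math]::Round([long]$m.Sum / 1GB, 2)
        }
    } | Sort-Object -Property Bytes -Descending
}

# Demo: two subfolders with nested files (made-up names and sizes)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
$a  = Join-Path $demo 'a'
$ax = Join-Path $a 'x'
$b  = Join-Path $demo 'b'
foreach ($dir in $demo, $a, $ax, $b) { New-Item -ItemType Directory -Path $dir | Out-Null }
[System.IO.File]::WriteAllBytes((Join-Path $a 'one.bin'), [byte[]]::new(1000))
[System.IO.File]::WriteAllBytes((Join-Path $ax 'two.bin'), [byte[]]::new(2000))
[System.IO.File]::WriteAllBytes((Join-Path $b 'three.bin'), [byte[]]::new(500))

$rows = Get-SubfolderSize -Path $demo
$rows | Format-Table Folder, Files, Bytes, SizeGB
Remove-Item -LiteralPath $demo -Recurse -Force
```

On a real system you would call it as Get-SubfolderSize -Path 'C:\Users' and, for example, pipe the result to Out-GridView.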

Let’s go further. Suppose your task is to find the size of each directory in the root of the system drive and present the results as a table that you can analyze and sort by folder size.

To get the size of the directories in the root of the system C:\ drive, run the following PowerShell script:

```powershell
$targetfolder = 'C:\'
$dataColl = @()
gci -force $targetfolder -ErrorAction SilentlyContinue | ? { $_ -is [io.directoryinfo] } | % {
    $len = 0
    # Total size of all files in this folder and its subfolders
    gci -recurse -force $_.fullname -ErrorAction SilentlyContinue | % { $len += $_.length }
    $foldername = $_.fullname
    # Keep the size as a number (not a formatted string) so the table sorts it numerically
    $foldersize = [math]::Round(($len / 1Gb), 2)
    $dataObject = New-Object PSObject
    Add-Member -inputObject $dataObject -memberType NoteProperty -name "foldername" -value $foldername
    Add-Member -inputObject $dataObject -memberType NoteProperty -name "foldersizeGb" -value $foldersize
    $dataColl += $dataObject
}
$dataColl | Out-GridView -Title "Size of subdirectories"
```

The Out-GridView cmdlet opens a graphical table that lists all folders in the root of the system drive C:\ together with their sizes. Click a column header to sort the folders by size. You can also export the results to a CSV file (for example, $dataColl | Export-Csv folder_size.csv -NoTypeInformation) and open it in Excel.

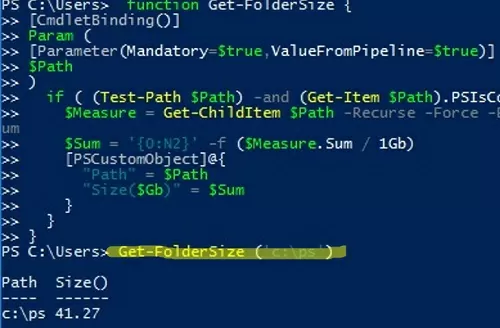
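When you export the table to CSV, keep in mind that Import-Csv reads every value back as a string, so sorting an imported file by size needs a cast. A minimal sketch with made-up sample rows (sizes are whole megabytes so that the CSV text does not depend on the decimal separator of the current culture):

```powershell
# Sample rows standing in for $dataColl (hypothetical folders and sizes in MB)
$dataColl = @(
    [PSCustomObject]@{ foldername = 'C:\Windows';       foldersizeMb = 41083 }
    [PSCustomObject]@{ foldername = 'C:\Users';         foldersizeMb = 9728 }
    [PSCustomObject]@{ foldername = 'C:\Program Files'; foldersizeMb = 82534 }
)

# Export, largest first; -NoTypeInformation omits the "#TYPE" line in Windows PowerShell 5.1
$csv = Join-Path ([System.IO.Path]::GetTempPath()) ('folder_size-' + [guid]::NewGuid() + '.csv')
$dataColl | Sort-Object foldersizeMb -Descending | Export-Csv -LiteralPath $csv -NoTypeInformation

# Import-Csv returns strings, so a plain sort compares text ("9728" sorts above "82534")
$asText = Import-Csv -LiteralPath $csv | Sort-Object foldersizeMb -Descending

# Casting inside a script block makes the sort numeric
$asNumber = Import-Csv -LiteralPath $csv | Sort-Object { [long]$_.foldersizeMb } -Descending

$asNumber | Format-Table
Remove-Item -LiteralPath $csv
```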

If you check directory sizes often in your PowerShell scripts, you can wrap the code in a separate function:

```powershell
function Get-FolderSize {
    [CmdletBinding()]
    Param (
        [Parameter(Mandatory=$true,ValueFromPipeline=$true)]
        $Path
    )
    # The process block runs once for every path received from the pipeline
    process {
        if ( (Test-Path $Path) -and (Get-Item $Path).PSIsContainer ) {
            $Measure = Get-ChildItem $Path -Recurse -Force -ErrorAction SilentlyContinue | Measure-Object -Property Length -Sum
            $Sum = '{0:N2}' -f ($Measure.Sum / 1Gb)
            [PSCustomObject]@{
                "Path" = $Path
                # Single quotes: inside double quotes, $Gb would expand to an empty variable
                'Size(GB)' = $Sum
            }
        }
    }
}
```

To use the function, simply run the command with the folder path as an argument:

```powershell
Get-FolderSize 'C:\PS'
```

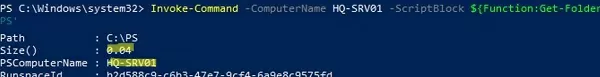
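If you want to pipe folders straight from Get-ChildItem -Directory, the function also needs to bind the FullName property of the incoming objects. The sketch below is a hypothetical variant (named Measure-FolderSize here so it does not clash with Get-FolderSize) that accepts path strings or folder objects from the pipeline, handles each one in a process block, and returns the size as numbers:

```powershell
# Hypothetical pipeline-friendly variant of Get-FolderSize
function Measure-FolderSize {
    [CmdletBinding()]
    param(
        # Accepts plain path strings, or folder objects through their FullName property
        [Parameter(Mandatory = $true, ValueFromPipeline = $true, ValueFromPipelineByPropertyName = $true)]
        [Alias('FullName')]
        [string[]]$Path
    )
    process {
        foreach ($p in $Path) {
            if (-not (Test-Path -LiteralPath $p -PathType Container)) {
                Write-Warning "Not a folder: $p"
                continue
            }
            $m = Get-ChildItem -LiteralPath $p -Recurse -File -Force -ErrorAction SilentlyContinue |
                Measure-Object -Property Length -Sum
            [PSCustomObject]@{
                Path   = $p
                Bytes  = [long]$m.Sum
                SizeGB = [math]::Round([long]$m.Sum / 1GB, 2)
            }
        }
    }
}

# Demo: two folders under a temporary root (made-up names and sizes)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ('size-demo-' + [guid]::NewGuid())
$a = Join-Path $demo 'a'
$b = Join-Path $demo 'b'
foreach ($dir in $demo, $a, $b) { New-Item -ItemType Directory -Path $dir | Out-Null }
[System.IO.File]::WriteAllBytes((Join-Path $a 'one.bin'), [byte[]]::new(1000))
[System.IO.File]::WriteAllBytes((Join-Path $b 'two.bin'), [byte[]]::new(2000))

# Pipe folder objects...
$perFolder = Get-ChildItem -LiteralPath $demo -Directory | Measure-FolderSize
# ...or plain strings
$total = $demo | Measure-FolderSize

$perFolder | Sort-Object Bytes -Descending | Format-Table
Remove-Item -LiteralPath $demo -Recurse -Force
```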

You can also use this local PowerShell function to check the folder size on remote computers with the Invoke-Command cmdlet (PowerShell Remoting):

```powershell
Invoke-Command -ComputerName hq-srv01 -ScriptBlock ${Function:Get-FolderSize} -ArgumentList 'C:\PS'
```

21 comments

Great script! Many thanks.

Just adding here: if you want to display the output directly on the screen instead of in the grid (for example, within a Docker container), use:

```powershell
$dataColl | Write-Output
```

Thanks for your addition!

Well written article, thanks!

I do have one suggestion. I have to run PowerShell from a command prompt and can’t run it as a script (running remotely with a tool that doesn’t have access to PowerShell). The formatting ({0:N2}) and the pipe were preventing the switches from working. So I had to replace ‘{0:N2}’ with ‘[math]::Round(…)’, and I had to escape the pipe with a caret. The end result looked like this:

```
[math]::Round((gci -force c:\Windows -Recurse -ErrorAction SilentlyContinue ^| measure Length -Sum).sum / 1Gb)
```

And that got me exactly what I needed. But I wouldn’t have gotten the jump start I needed without this guidance.

Thanks again!

Hello,

How can I add a code line for files?

Thank you


I added the command below to get a file count as well, but it counts only one level of subdirectories; it doesn’t count the files in nested subfolders. Can you help with it?

```powershell
Add-Member -InputObject $dataobject -MemberType NoteProperty -name "count" -Value (Get-ChildItem dir $_.FullName -recurse | Measure-Object).Count
```

This is exactly what I was looking for. One request: how can I export the final result to a .csv file?

I did it manually: I selected all the lines, copied them and pasted them into Excel.

They were pasted already separated into columns.

A script that does this automatically would be better.

```powershell
$dataColl | Export-Csv "Path to the CSV file" -NoTypeInformation
```

The last script, which shows the sizes of all subfolders in a GUI, doesn’t work. The previous script worked, but this one just hangs in PS and nothing happens…

Great Script!!! Thank you!

I had to manually delete and retype the double quotes. Then it worked!

Only sorting by size doesn’t work for me: the sizes are sorted as text, like this:

97,5

9

80,6

8

But it’s just a detail that doesn’t take away from the script’s merits. Great script!


Nice code, it works

I like the third-party tool Directory Report.

It can filter by file types, modification date, size and owner

It can save its output to many file types including directly to MS-Excel

Sorting fix:

```powershell
$targetfolder = 'C:\'
$dataColl = @()
gci -force $targetfolder -ErrorAction SilentlyContinue | ? { $_ -is [io.directoryinfo] } | % {
    $len = 0
    gci -recurse -force $_.fullname -ErrorAction SilentlyContinue | % { $len += $_.length }
    $foldername = $_.fullname
    # [math]::Round returns a number, so the size column sorts numerically
    $foldersize = [math]::Round(($len / 1Gb), 2)
    $dataObject = New-Object PSObject
    Add-Member -inputObject $dataObject -memberType NoteProperty -name "foldername" -value $foldername
    Add-Member -inputObject $dataObject -memberType NoteProperty -name "foldersizeGb" -value $foldersize
    $dataColl += $dataObject
}
$dataColl | Out-GridView -Title "Size of subdirectories"
```

Unbelievable, a basic daily task needs… a program? Well done, MS!! What’s next?

Will you replace the “cls” with a 20-line program to clean the screen?

Actually, cls is an alias for the Clear-Host function.

You can check it with this command:

```powershell
Get-Command cls
```

```
CommandType     Name                 Version    Source
-----------     ----                 -------    ------
Alias           cls -> Clear-Host
```

And you can get the body of the Clear-Host function with this command:

```powershell
Get-Command Clear-Host | select -ExpandProperty Definition
```

```powershell
$RawUI = $Host.UI.RawUI
$RawUI.CursorPosition = @{X=0;Y=0}
$RawUI.SetBufferContents(
    @{Top = -1; Bottom = -1; Right = -1; Left = -1},
    @{Character = ' '; ForegroundColor = $rawui.ForegroundColor; BackgroundColor = $rawui.BackgroundColor})
# .Link
# https://go.microsoft.com/fwlink/?LinkID=2096480
# .ExternalHelp System.Management.Automation.dll-help.xml
```

Without the comments, it’s five lines of code.

But I think getting the folder size is so useful that PowerShell could include a command for it.

If you are on a file system with a big cluster size, or are trying to find the size with byte precision, you can’t do that with this script. The cluster size becomes your minimum size, and all other sizes are multiples of it.

Unfortunately, there is no way in PowerShell to do the same as you do in Explorer, or at least nobody knows of one.

Is it possible to add a member for the file count in each folder? This is perfect; I edited it a tiny bit to match what we need for planning file server migrations (well, at least the planning).